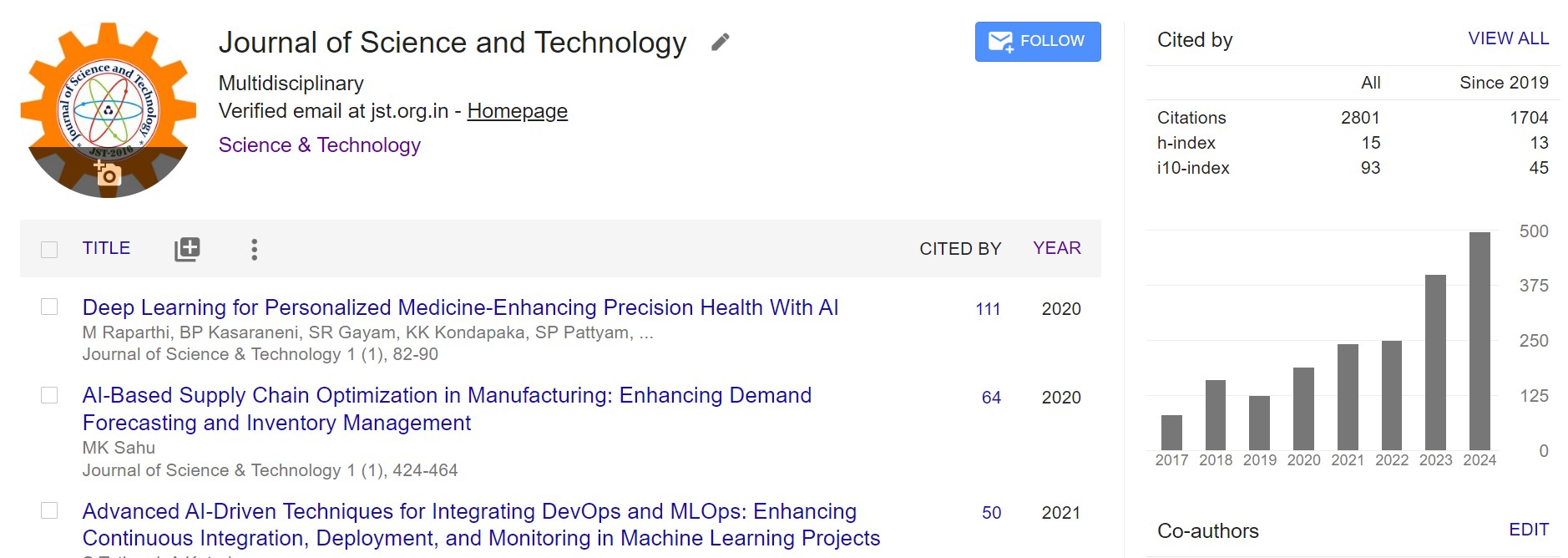

A Machine Learning Framework For Data Poisoning Attacks

DOI:

https://doi.org/10.46243/jst.2023.v8.i07.pp169-176

Keywords:

Federated machine learning, Vulnerability, Arbitrary, Attack on federated machine learning (AT2FL), Gradients.

Abstract

Federated models are built by aggregating model updates from participants. To preserve the privacy of the training data, the aggregator by design has no visibility into how these updates are generated. This paper explores the vulnerability of federated machine learning by attacking a federated multi-task learning framework. The framework enables resource-constrained node devices, such as mobile phones and IoT devices, to learn a shared model while keeping the training data local. However, attackers may exploit the communication protocol among nodes to conduct data poisoning attacks, which have been shown to pose a serious threat to most machine learning models. The paper formulates the problem of computing optimal poisoning attacks on federated multi-task learning as a bi-level program that adapts to an arbitrary choice of target nodes and source attacking nodes. The authors propose a novel systems-aware optimization method, Attack on Federated Learning (AT2FL), which efficiently derives the implicit gradients for poisoned data and uses them to compute optimal attack strategies in federated machine learning.
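
The bi-level program mentioned above can be sketched in generic form as follows. This is an illustrative outline under assumed notation, not necessarily the paper's exact formulation: $\mathcal{T}$ (target nodes), $\mathcal{S}$ (source attacking nodes), $D_\ell$ (clean data at node $\ell$), $\hat{D}_\ell$ (poisoned data injected at node $\ell$, empty when $\ell \notin \mathcal{S}$), $\mathcal{L}_\ell$ (loss at node $\ell$), $W = [w_1, \dots, w_m]$ (per-node model weights), and $R$ (a regularizer coupling the tasks) are all introduced here for illustration.

```latex
\begin{aligned}
\max_{\{\hat{D}_s\}_{s \in \mathcal{S}}} \quad
  & \sum_{t \in \mathcal{T}} \mathcal{L}_t\bigl(D_t;\, w_t^{\star}\bigr) \\
\text{s.t.} \quad
  & W^{\star} = \arg\min_{W} \; \sum_{\ell=1}^{m}
    \mathcal{L}_\ell\bigl(D_\ell \cup \hat{D}_\ell;\, w_\ell\bigr) + R(W)
\end{aligned}
```

In this sketch the upper level is the attacker's objective (degrading the loss on the target nodes), while the lower level is the ordinary federated multi-task training problem solved on the poisoned data. The poisoned data affects the upper-level objective only through $W^{\star}$, so its gradient is implicit: it has to be obtained by differentiating through the lower-level solution.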