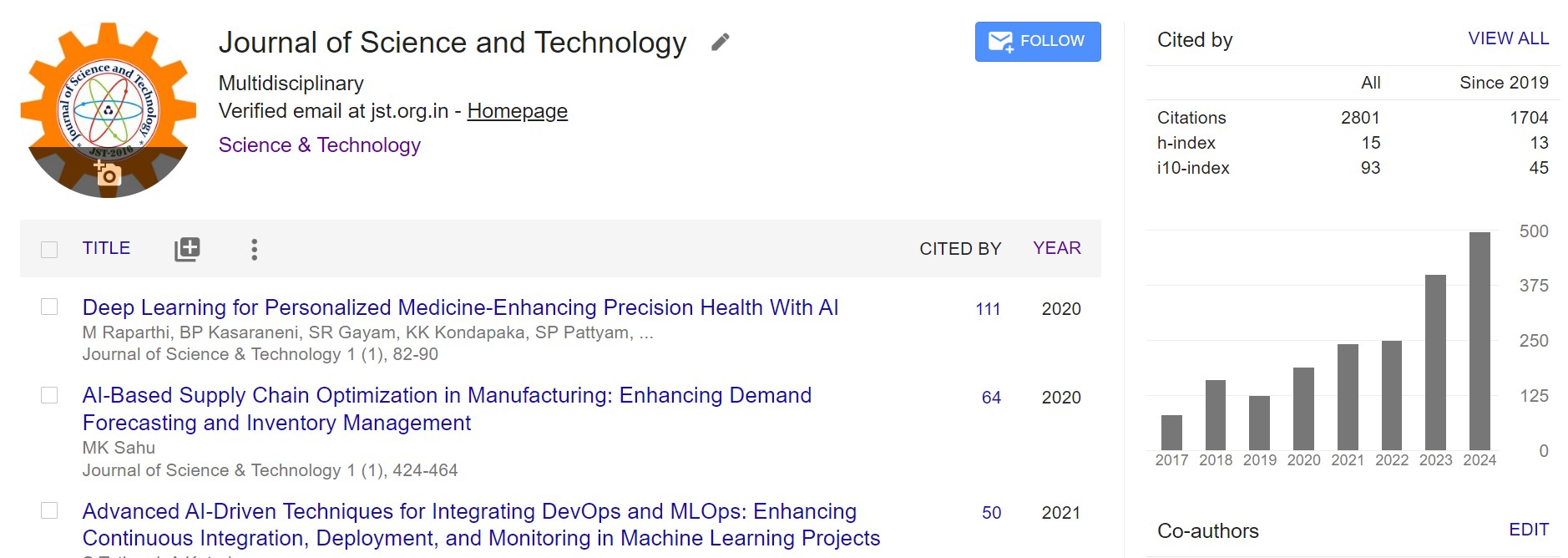

Bi-Modal Oil Temperature Forecasting in Electrical Transformers using a Hybrid of Transformer, CNN and Bi-LSTM

DOI:

https://doi.org/10.46243/jst.2023.v8.i05.pp49-56

Keywords:

transformers, vision transformers, positional encoding, multi-head attention, forecasting

Abstract

Predicting power consumption is difficult because demand fluctuates. If the expected demand is far higher than the existing demand, the transformer may be damaged. Predicting the temperature of the transformer oil is an efficient way to verify the transformer's safety status. In this study, we therefore propose a bimodal architecture that predicts oil temperature from a sequence of prior temperatures. We tested the model on the ETTm1, ETTm2, and ETTh1 datasets. On the ETTm1 test set it achieved an RMSE of 0.41375, an MAE of 0.3031, and a MAPE of 8.292%; on the ETTm2 test set, an RMSE of 0.4105, an MAE of 0.3090, and a MAPE of 6.678%; and on the ETTh1 test set, an RMSE of 0.6762, an MAE of 0.4690, and a MAPE of 11.23%.
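For readers unfamiliar with the three reported metrics, the following is a minimal sketch of their standard textbook definitions in plain Python. The function names and the toy values are illustrative assumptions, not taken from the paper. The abstract does not say whether the metrics were computed on normalized or raw temperatures, or how zero-valued targets were handled in MAPE, so skipping zero targets here is an assumption as well.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean squared difference."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )


def mae(y_true, y_pred):
    """Mean absolute error: mean of the absolute differences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent.

    Assumption: targets equal to zero are skipped to avoid division by zero.
    """
    terms = [abs((t - p) / t) for t, p in zip(y_true, y_pred) if t != 0]
    return 100.0 * sum(terms) / len(terms)


# Toy oil-temperature values (hypothetical, for illustration only).
actual = [2.0, 4.0, 5.0]
forecast = [1.0, 4.0, 7.0]

print(rmse(actual, forecast))
print(mae(actual, forecast))
print(mape(actual, forecast))
```

RMSE penalizes large errors more heavily than MAE does, and MAPE expresses the error relative to the magnitude of the target, which is why the three can rank models differently across datasets.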